Why permanent data cleansing is not the solution!

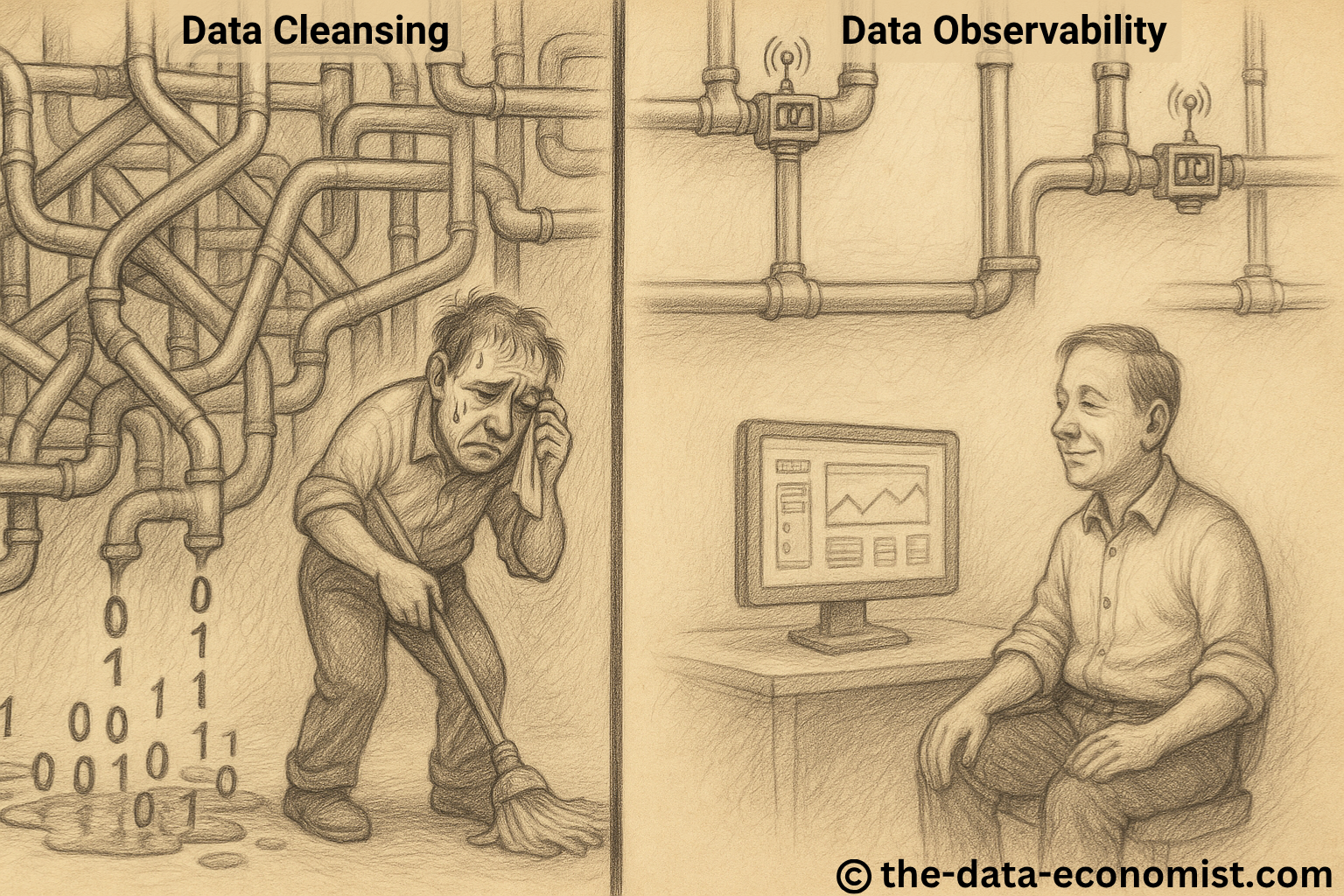

Data Cleansing vs. Data Observability

Data cleansing is not a solution but a symptom of a poorly designed data strategy and inefficient processes. Many companies invest considerable resources in continuous cleansing without ever eliminating the actual causes of poor data quality. But if you keep mopping without plugging the leak, the floor will never be dry.

Practical example: Data quality at sales partners

In practice, customer data collected and supplied by business partners often has serious quality problems. When I was Director of Data Governance & Strategy at a telecommunications company, a data quality analysis showed that the customer data supplied by our sales partners was significantly worse than the data collected by our own sales team. This was critical because a third of our revenue came through business partners, so the quality of their data directly affected our business success. Although these partners received high marketing budgets for customer acquisition, campaigns often underperformed because the poor data quality significantly impaired their effectiveness. On top of that, the constant data cleansing was very costly. We therefore sat down with our business partners and changed the contracts and the incentive system so that the partners had a strong interest in delivering high data quality from the outset.

Why reactive action is not enough

The core problem is that data quality is too often treated reactively – similar to constantly mopping a flooded floor without repairing the broken pipe. Companies invest in expensive clean-up measures instead of addressing the root causes: flawed processes, unclear responsibilities, inadequate incentives. In addition, business partners usually feel no obligation to improve their data quality on their own initiative – because the costs and responsibility often remain with the company.

Incentive systems as a lever for change

An effective solution lies in redesigning partnership agreements: business partners should only be promoted to higher status levels (e.g. bronze, silver, gold, platinum) and given access to more attractive marketing budgets if they deliver high-quality customer data. In our case, this approach quickly led to a significant improvement in quality. The partners benefited from better results, while the company benefited from lower cleansing costs and more successful campaigns. The key is to set incentives so that they encourage partners to consistently improve the processes and structures from which the data originates.
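As a rough illustration of how such a status model could be tied to data quality, the following sketch maps a partner's share of valid records to the highest tier it qualifies for. The threshold values and the function names `quality_score` and `eligible_tier` are assumptions made for this example; they are not taken from the contracts described above.

```python
# Hypothetical thresholds: minimum share of valid records required per tier.
# Ordered from the highest tier to the lowest.
TIER_THRESHOLDS = [
    ("platinum", 0.98),
    ("gold", 0.95),
    ("silver", 0.90),
    ("bronze", 0.80),
]


def quality_score(valid_records: int, total_records: int) -> float:
    """Share of delivered records that passed the quality checks."""
    if total_records == 0:
        return 0.0
    return valid_records / total_records


def eligible_tier(score: float):
    """Return the highest tier whose threshold the score meets, or None."""
    for tier, minimum in TIER_THRESHOLDS:
        if score >= minimum:
            return tier
    return None


# Example: a partner delivered 100 records, 96 of which passed validation.
tier = eligible_tier(quality_score(96, 100))
```

The point of such a rule is that the status level, and with it the marketing budget, follows from measured data quality rather than from sales volume alone.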

Process design as the foundation of reliable data

Good data quality does not start with the data set, but with the process design. Poor data is often the result of poorly defined, incomplete or inefficient processes. Only when processes are designed to be clear, consistent and error-resistant from the outset can data be generated that companies can rely on. Conversely, poor data is also revealing: it shows that an underlying process is not working well – a valuable insight that can become the starting point for targeted improvements.

Data observability: transparency creates trust

Another key to sustainable data quality is the concept of data observability. It encompasses the continuous monitoring, management and maintenance of data across different processes, systems and pipelines to ensure its quality, availability and reliability. This continuous transparency acts as a strategic early warning system: If data arrives incomplete, late or in the wrong format, you can react immediately – before wrong decisions are made or systems are disrupted.
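The three failure modes named above (incomplete, late, wrong format) can be sketched as simple checks on each delivered batch. This is a minimal example under stated assumptions: the field names, the email pattern, the 24-hour delivery window and the names `BatchReport` and `check_batch` are all hypothetical and would differ in a real pipeline.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical field names and limits, chosen for illustration only.
REQUIRED_FIELDS = ("customer_id", "email", "postal_code")
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
MAX_DELAY = timedelta(hours=24)


@dataclass
class BatchReport:
    incomplete: int  # records with a missing or empty required field
    bad_format: int  # records whose email does not match EMAIL_PATTERN
    late: bool       # batch arrived after the agreed delivery window

    @property
    def healthy(self) -> bool:
        return self.incomplete == 0 and self.bad_format == 0 and not self.late


def check_batch(records, expected_at, received_at):
    """Run completeness, format and freshness checks on one batch."""
    incomplete = sum(
        1 for r in records
        if any(not r.get(field) for field in REQUIRED_FIELDS)
    )
    bad_format = sum(
        1 for r in records
        if r.get("email") and not EMAIL_PATTERN.match(r["email"])
    )
    late = received_at - expected_at > MAX_DELAY
    return BatchReport(incomplete, bad_format, late)


# Example batch: one clean record, one malformed email, one missing email.
expected = datetime(2024, 1, 1, tzinfo=timezone.utc)
batch = [
    {"customer_id": "1", "email": "a@example.com", "postal_code": "12345"},
    {"customer_id": "2", "email": "not-an-email", "postal_code": "12345"},
    {"customer_id": "3", "email": "", "postal_code": "12345"},
]
report = check_batch(batch, expected, expected + timedelta(hours=30))
```

A report that is not `healthy` would trigger an alert before the batch reaches campaigns or downstream systems, which is the early warning function described above.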

Data observability is essential, especially in combination with automated processes or AI-based applications. It helps to identify patterns of poor data quality, reveal structural causes and minimise operational risks at an early stage. This makes data observability the interface between technical precision and strategic control – enabling companies to use data as a real competitive factor.
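One simple way to make patterns of poor data quality visible is to aggregate validation failures by data source, for example by sales partner. The sketch below assumes each record carries a hypothetical `source` field and that a validation function is supplied by the caller; both are illustrative choices, not part of any specific tool.

```python
from collections import Counter


def failure_rate_by_source(records, is_valid):
    """Return the share of invalid records for each data source."""
    totals = Counter()
    failures = Counter()
    for record in records:
        source = record["source"]  # hypothetical field naming the supplier
        totals[source] += 1
        if not is_valid(record):
            failures[source] += 1
    return {source: failures[source] / totals[source] for source in totals}


# Example: treat a record as valid if it has a non-empty email.
sample = [
    {"source": "partner", "email": ""},
    {"source": "partner", "email": "a@example.com"},
    {"source": "own_sales", "email": "b@example.com"},
]
rates = failure_rate_by_source(sample, lambda r: bool(r["email"]))
```

A source whose failure rate stays high over time points to a structural cause in the process behind it rather than to individual bad records.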

Conclusion: Act on causes rather than symptoms

Constant data cleansing is expensive and inefficient, and it does not solve the actual problem. Companies must treat data quality as a core strategic task. That includes clearly defined responsibilities and, above all, targeted incentives that go beyond mere quality metrics and motivate improvements to the underlying processes and structures. Only such a cause-oriented approach can turn isolated corrections into a holistic, data-oriented transformation and thus create real business value in the long term.

